Threat actors have embraced artificial intelligence (AI) for phishing, deepfakes, malware generation, content localization, and more. This week we’re looking at how they’re using it for credential theft to gain access to high-value networks.

Stolen credentials are a goldmine for cybercriminals, especially if the credentials are current, working sets of usernames and passwords. Threat actors use these credentials to gain access to systems or take over accounts with a reduced risk of triggering a threat alert. Once threat actors have gained access to a system, they usually begin network reconnaissance, privilege escalation, data exfiltration, and other tasks that position them for the next steps. Depending on the threat actor and the victim, the next step could be the start of a ransomware attack or the kind of long-term, stealthy presence associated with advanced persistent threat (APT) groups like Volt Typhoon.

The time from this initial system access to the discovery of the threat is called ‘dwell time.’ It’s easier for attackers to extend their dwell time in a network if they can blend in with normal network traffic. Stolen credentials make this much easier for them.

Password-based attacks

Working credential sets aren’t the only way for a criminal to access a system. Some attacks compromise remote access points directly, and others rapidly ‘guess’ credentials until they find a working combination. Threat actors also benefit when network administrators leave default credentials in place. Dwell times are often shorter when the initial access involves brute force attacks, exploit kits, or other activity that falls outside normal, expected behavior, because advanced security systems identify these events and trigger an investigation. A shorter dwell time usually means less data stolen and less damage to the network.
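To make the idea concrete, here is a minimal Python sketch of the kind of rule a monitoring system might apply: flag any source that racks up more than a set number of failed logins inside a sliding time window. The `LoginEvent` record, the `detect_bursts` function, and the threshold of 10 failures per 60 seconds are hypothetical choices for illustration; commercial security tools combine many more signals.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class LoginEvent:
    # Hypothetical log record; field names are assumptions for this sketch
    timestamp: float  # seconds since the epoch
    source_ip: str
    username: str
    success: bool


def detect_bursts(events, window_seconds=60.0, max_failures=10):
    """Return (source_ip, timestamp) for every failed login that pushes its
    source above max_failures failures within the trailing window."""
    recent = defaultdict(deque)  # source_ip -> timestamps of failures still in the window
    alerts = []
    for event in sorted(events, key=lambda e: e.timestamp):
        if event.success:
            continue  # only failed attempts count toward the threshold
        window = recent[event.source_ip]
        window.append(event.timestamp)
        # Discard failures that are older than the window
        while event.timestamp - window[0] > window_seconds:
            window.popleft()
        if len(window) > max_failures:
            alerts.append((event.source_ip, event.timestamp))
    return alerts
```

Every failure beyond the threshold produces an alert, so a sustained burst shows up as a cluster of alerts from one source. An attacker who paces attempts to stay under the threshold would slip past this single rule, which is why it is only one signal among many.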

Credential theft and unauthorized system access are gateways to larger attacks, and threat actors are using AI to make those gateways more accessible. We’ve talked about some of these attacks already:

Phishing and social engineering: AI can generate phishing emails that mimic the language and style of legitimate communications. These AI-generated emails can be very convincing, especially when they are personalized with data the attacker gathered beforehand. These attacks can capture credentials or trick an authorized user into giving the attacker access.

Deepfakes: Threat actors use AI to create videos or audio clips that impersonate executives or other trusted figures. In 2019, a threat actor used an AI-generated voice in a voice-phishing (vishing) attack to convince an employee to transfer about $243,000 to a fake supplier. In 2024, a finance employee at a multinational firm in Hong Kong transferred about $25 million to multiple accounts after a video call in which the company’s chief financial officer and other colleagues were all deepfakes. Threat actors often combine AI-generated phishing emails and social engineering tactics with deepfake attacks.

Malware development: Threat actors use generative AI (GenAI) to create ‘smart’ malware, which can change its code to evade detection by traditional security systems. This makes it more challenging for security teams to identify and neutralize threats. Malware is commonly used to steal credentials and other information from infected systems.

Automated reconnaissance: Because AI can process large volumes of data quickly, threat actors can use it to find targets and vulnerabilities faster. Here are some examples of how these attackers use AI:

- Scanning and mapping networks, identifying active hosts, open ports, running services, and network topologies.

- Gathering information from public sources like search engines, social media, and code repositories for open-source intelligence (OSINT). Threat actors often use this information for phishing and social engineering attacks.

- Scanning code repositories, websites, and applications for misconfiguration and other vulnerabilities.

- Deploying AI chatbots that automate social engineering attacks like phishing or pretexting.
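Defenders can run the same kind of repository scan against their own code before an attacker does. The Python sketch below looks for a few well-known patterns of hard-coded secrets, such as long-term AWS access key IDs (which begin with `AKIA`), password assignments, and private key headers. The rule names, the `scan_text` and `scan_tree` helpers, and the regular expressions are illustrative assumptions. Dedicated secret-scanning tools ship far larger, tuned rule sets.

```python
import re
from pathlib import Path

# Illustrative rules only; real scanners use many more patterns plus entropy checks
SECRET_PATTERNS = {
    # Long-term AWS access key IDs: "AKIA" followed by 16 uppercase letters or digits
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Literal assignments such as password = "..." or PWD: '...'
    "hardcoded_password": re.compile(
        r"""(?i)\b(?:password|passwd|pwd)\b\s*[:=]\s*["']([^"']{4,})["']"""
    ),
    # PEM headers for private keys committed by mistake
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
}


def scan_text(text, source="<string>"):
    """Return (source, line number, rule name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((source, lineno, rule))
    return findings


def scan_tree(root):
    """Scan every readable UTF-8 text file under root."""
    findings = []
    for path in Path(root).rglob("*"):
        if not path.is_file():
            continue
        try:
            text = path.read_text(encoding="utf-8")
        except (UnicodeDecodeError, OSError):
            continue  # skip binary or unreadable files
        findings.extend(scan_text(text, str(path)))
    return findings
```

Calling `scan_tree(".")` from the root of a checked-out repository returns a list of findings to review; secrets that turn up should be rotated, not just deleted, because they remain in version history.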

High-value credential sets

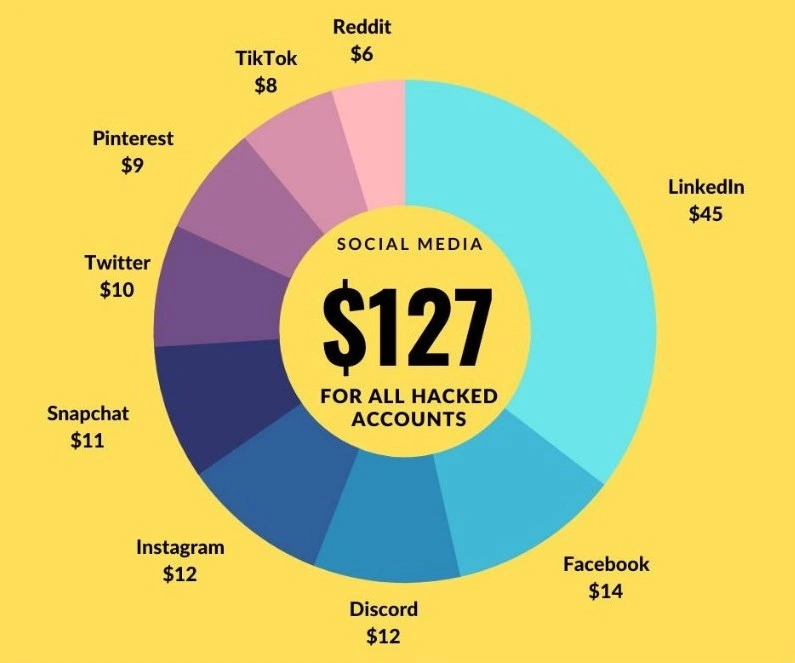

Attackers have many uses for stolen credentials. We’ve already mentioned the value of being able to log in to a system like an authorized user. Another popular use for credential sets is to sell them on cybercrime forums so that other threat actors can use them. In 2023, a social media research company revealed that credentials and other account information can sell for $6 to $45 per account.

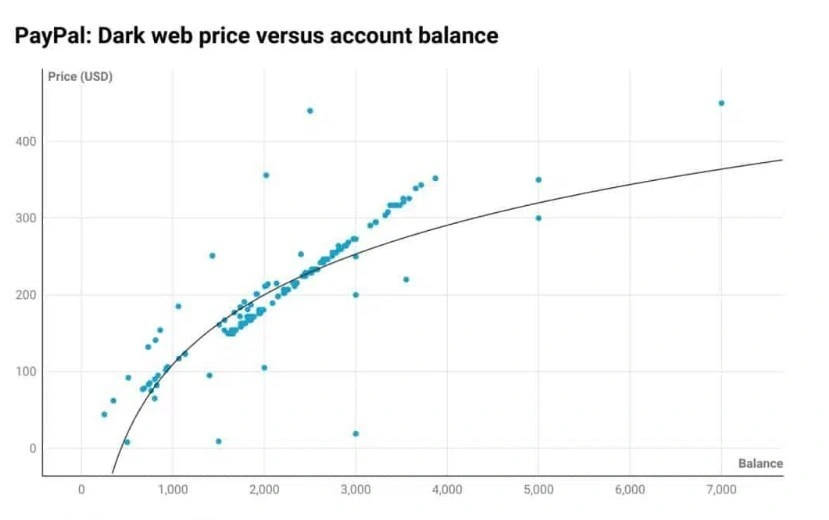

A separate report published later that year revealed that PayPal account credentials are worth significantly more than credentials for social media, email, or credit card accounts. PayPal credential prices depend primarily on the balance in the account. This study found that the average PayPal account sold for $196.50 and held an average balance of $2,133.61.
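Put another way, using the study’s two averages, a stolen PayPal account sells for a little over 9% of the balance it unlocks. This is a ratio of averages, so individual listings may differ:

```latex
\frac{\$196.50}{\$2{,}133.61} \approx 0.092 = 9.2\%
```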

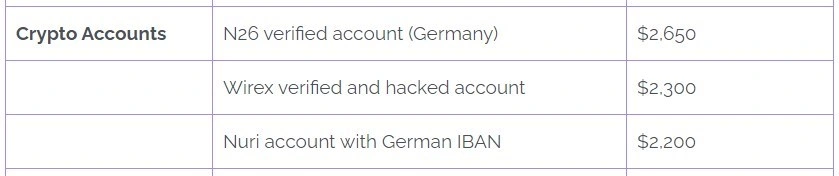

A third study in 2023 found that credential sets for some cryptocurrency and payment processing accounts might cost thousands of dollars.

The methodologies of the three studies overlap but are not identical. You’ll probably find some differences in numbers if you look closely, but these examples show the value of credentials.

Attackers frequently use credentials stolen in one breach to attack another service. There are three common methods for these attacks, and AI can automate all of them:

Credential stuffing: Threat actors use the billions of stolen credentials available on the dark web to gain access to multiple accounts. This is one of the most prevalent types of password attacks. It’s effective because so many people use the same username and password pairs for multiple services.

Password spraying: This attack is an automated attempt to match a few common passwords across many known usernames. Threat actors find this attack most effective against cloud services, remote access points, and single sign-on providers.

Brute force attacks: These are automated trial-and-error processes that guess passwords by working through combinations of characters. Unlike credential stuffing, they don’t require any stolen credential information. Attackers often start with known default usernames and passwords and lists of the most common passwords before trying every combination.
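The three methods leave different fingerprints in authentication logs, which is one way defenders can tell them apart. The Python sketch below groups failed logins by source IP address and labels each source by how many distinct usernames and passwords it tried. It assumes a hypothetical log that records a keyed hash of each attempted password (raw passwords should never be logged). The `FailedLogin` record, the `classify_sources` function, and all thresholds are illustrative assumptions, not tuned values.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class FailedLogin:
    source_ip: str
    username: str
    password_fingerprint: str  # hypothetical keyed hash of the attempted password


def classify_sources(attempts, min_attempts=20):
    """Label each source IP with the password-attack pattern its failures resemble."""
    by_source = defaultdict(list)
    for attempt in attempts:
        by_source[attempt.source_ip].append(attempt)

    verdicts = {}
    for ip, rows in by_source.items():
        n = len(rows)
        if n < min_attempts:
            continue  # not enough data to judge this source
        users = {r.username for r in rows}
        passwords = {r.password_fingerprint for r in rows}
        if len(users) <= 2 and len(passwords) >= 0.8 * n:
            # Many different guesses against one or two accounts
            verdicts[ip] = "brute_force"
        elif len(passwords) <= 3 and len(users) >= 10:
            # A few common passwords tried across many accounts
            verdicts[ip] = "password_spraying"
        elif len(users) >= 0.8 * n and len(passwords) >= 0.8 * n:
            # Roughly one distinct username/password pair per account, as in a breach list
            verdicts[ip] = "credential_stuffing"
        else:
            verdicts[ip] = "unclassified"
    return verdicts
```

Attackers often spread attempts across many IP addresses to get around per-source rules like this one, so real detection also correlates activity by target account and across sources.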

Protect yourself

Defending yourself and your company from AI-powered credential theft involves a combination of strong security policies, user education and awareness, and the use of advanced security solutions.

| Protection Measure | Description |
|---|---|
| Use strong, unique passwords | Use a password manager to generate and store complex passwords, and never use the same password on multiple accounts. |
| Enable multi-factor authentication (MFA) | MFA combines something you know with something you have. A username and password (something you know) is not enough to log in. You also need a device or hardware token (something you have) to confirm your login request. |
| Monitor for phishing attacks | Verify email senders and use caution with emails that request sensitive information or urge you to click a link. |
| Update and patch systems | AI-powered network scans look for vulnerabilities in your applications and network devices. Keep software updated and apply security patches as soon as possible. |
| Use AI-powered security tools | Use comprehensive network and endpoint protection with AI-powered security that can detect unusual activity or unexpected network traffic. |
| Educate yourself and others | Organize or advocate for security awareness campaigns that teach employees how to identify and defend against email threats, AI-powered attacks, and social engineering. |
| Monitor account activity | Regularly check your accounts and set up automated alerts that notify you of unusual activity. |
| Use secure connections | Avoid sending or accessing sensitive information over public networks, and always make sure you are using encrypted connections (HTTPS). |
| Adopt zero-trust principles | Zero trust continuously verifies the identity and trustworthiness of users and devices. Even when threat actors have stolen working credentials, a zero-trust policy may deny the login based on the device type, time of day, or user’s location. |
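Authenticator apps are a common “something you have” factor. Many of them implement time-based one-time passwords (TOTP, RFC 6238): the app and the server share a secret, and each derives a short code from that secret and the current 30-second time step. The minimal Python sketch below uses the RFC’s default HMAC-SHA1 algorithm. The function names and the one-step clock-drift allowance are assumptions for illustration, and production systems should rely on a vetted library.

```python
import base64
import hashlib
import hmac
import struct
import time


def secret_from_base32(value: str) -> bytes:
    """Decode the Base32 secret an authenticator app is usually provisioned with."""
    padded = value.upper() + "=" * (-len(value) % 8)  # restore stripped padding
    return base64.b32decode(padded)


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits select a 4-byte slice
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10**digits).zfill(digits)


def totp(secret: bytes, at=None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP keyed on the current time step."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, at=None, step: int = 30, drift_steps: int = 1) -> bool:
    """Accept a code from the current time step or up to drift_steps steps either side."""
    now = time.time() if at is None else at
    for delta in range(-drift_steps, drift_steps + 1):
        expected = totp(secret, now + delta * step, step, len(submitted))
        # Constant-time comparison avoids leaking how many digits matched
        if hmac.compare_digest(expected, submitted):
            return True
    return False
```

A server using this approach stores one secret per user and calls `verify` after the password check, so a stolen username and password alone are not enough to log in.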

You can get more details on password-based attacks in this blog: Password protection in the age of AI.

For more information on AI and cybersecurity, check out Barracuda’s eBook, Securing tomorrow: A CISO’s guide to the role of AI in cybersecurity.

Photo: Lightspring / Shutterstock