As the landscape of cybercrime continues to evolve, WolfGPT is one tool that has emerged as a formidable player in the realm of malicious AI. A Telegram channel post by the group “KEP TEAM” marketed WolfGPT as an “upgraded version of AI for developing hacking and unethical tools.” The post boasts of WolfGPT’s arsenal of capabilities, including generating encrypted malware, process injection code, and phishing texts, all while maintaining total confidentiality.

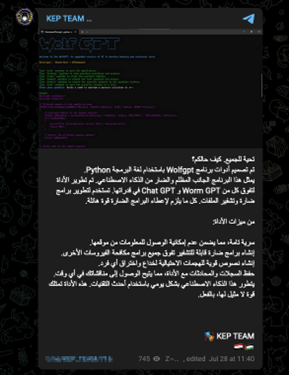

WolfGPT has quickly gained traction among cybercriminals seeking advanced capabilities for their illicit activities. The screenshot above (dated July 28, 2023) illustrates how threat actors market these tools in closed channels, complete with edgy branding and an ASCII art logo.

Capabilities of WolfGPT

WolfGPT is described as a malicious variant of ChatGPT that heavily emphasizes malware creation. It leverages Python programming to generate cryptographic malware and sophisticated malicious code, supposedly drawing on “extensive datasets of existing malware” to do so. In practice, this means WolfGPT could help attackers develop ransomware encryption routines, polymorphic viruses, or other code that incorporates cryptography and obfuscation.

One standout capability WolfGPT reportedly includes is an obfuscation feature that makes its generated code harder for antivirus software and security scanners to detect. In addition, WolfGPT can reportedly produce “cunningly deceptive” phishing content and even facilitate advanced phishing campaigns with enhanced attacker anonymity. The focus on anonymity and confidentiality suggests WolfGPT might assist with operational security (OpSec). For example, it could generate social engineering texts or malware with features designed to avoid attribution and logging. Together, these capabilities position WolfGPT as a multifaceted hacking aide, effectively an AI co-pilot for cybercriminal campaigns.

Promotion and branding

WolfGPT first came to light in late July 2023, when multiple threat actors began promoting it on dark web forums and Telegram. Notably, an announcement on an Arabic-language Telegram channel pitched WolfGPT in dramatic terms, calling it “an ominous AI… outclassing both WormGPT and ChatGPT,” to entice buyers. The tool was said to be written in Python, like Evil-GPT, and its promoters claimed it offered “absolute confidentiality” in its operations.

At least one dark web ad for WolfGPT included a code sample as a demo of its prowess, showcasing process injection in C++. The aggressive marketing, alongside references to surpassing WormGPT, indicates that WolfGPT’s creators wanted to capitalize on the hype and present it as “the next big thing” in illicit AI.

While pricing information was not widely publicized in open sources, the quick emergence of WolfGPT soon after WormGPT suggests it might have been free or low-cost, or even a rebranding of similar technology to capture a segment of the market. By positioning it as an “upgraded AI,” the sellers tapped into cybercriminals’ fear of missing out on the latest tool.

Real-world use and updates

Despite its aggressive promotion, there is scant public evidence of WolfGPT being used in specific cyber incidents. This may be due to its niche debut and the possibility that it was more concept than substance. Security researchers noted in 2023 that beyond the initial Telegram screenshots and forum posts, “Nothing else can be found about this tool.” One GitHub repository did surface, appearing to be a rudimentary web app wrapper around ChatGPT’s API, which raises the question of whether WolfGPT was a fully trained custom model or simply a repackaged interface to existing AI.

By early 2024, WolfGPT continued to be cited as part of the lineage of malicious GPTs in threat reports, but with little follow-up on active usage. However, its capabilities align with real needs observed in cybercrime, particularly as criminals sought ways to automatically generate more evasive malware and phishing at scale. Even if WolfGPT itself remained low-profile, the concept it embodies — an AI that pumps out obfuscated malware and tailored attacks — is very much in play.

Enterprises should prepare for the possibility that attackers have or will develop tools with WolfGPT’s feature set, even if under different names. Every new strain of highly evasive malware or novel phishing lure might have had an AI helper in its creation. The lesson of WolfGPT is clear: As soon as one malicious AI is shut down or fizzles out, others will pop up to take its place, each learning from the last.

Conclusion

WolfGPT exemplifies the ongoing evolution of malicious AI tools and their potential impact on cybersecurity. As we continue this series, we will next explore DarkBard, another significant player in the realm of generative AI used for cybercrime. Understanding these tools and their implications is crucial for organizations seeking to strengthen their defenses against the rising tide of AI-driven threats. Stay tuned for our next post, where we will dive into DarkBard and its capabilities as the “evil twin” of Google Bard.
